Synthetic data tools are transforming research by offering privacy-safe, cost-effective, and fast solutions for generating datasets. These tools replicate real-world data patterns without exposing sensitive information, making them essential for industries navigating strict privacy regulations like CCPA, GDPR, and HIPAA.

Here’s a quick rundown of the top synthetic data tools for 2025 and 2026:

- Syntellia: AI-driven platform delivering insights in 30–60 minutes with 90% accuracy, cutting costs by 90%.

- YData Fabric: Focuses on privacy-compliant data synthesis and pipeline orchestration for AI development.

- Tonic.ai: Dual-engine system for generating synthetic data and masking sensitive production data.

- Synthetic Data Vault (SDV): Open-source Python framework for generating datasets with local infrastructure.

- Gretel: Developer-friendly tool for creating text, tabular, and time-series datasets with built-in metrics.

- Mostly AI: Privacy-first platform for generating statistically identical datasets for AI training.

- Simulacra Synthetic Data Studio: Combines Causal AI with synthetic data for real-time scenario modeling.

- Lemon AI: Versatile platform supporting various data types with strong regulatory compliance.

- brewdata: Streamlines dataset creation, reducing preparation time by 70% while boosting model accuracy.

- Synthera AI: Emerging tool requiring careful evaluation due to limited public information.

These platforms cater to diverse research needs, from consumer insights to AI model training, while ensuring compliance with privacy laws and reducing reliance on real-world data.

1. Syntellia

In today’s fast-paced research environment, managing data efficiently, securely, and affordably is more important than ever. Syntellia steps in as an AI-powered platform designed for consumer, employee, and policy research. Its standout feature? The ability to create virtual respondents that mimic real human behavior patterns, delivering insights with an impressive 90% accuracy.

Syntellia completely redefines timelines for research. While traditional studies can take 6–12 weeks, this platform delivers results in just 30–60 minutes by instantly surveying its AI-driven synthetic respondents.

Another major advantage is the cost savings. Traditional research projects typically cost between $50,000 and $250,000, but Syntellia’s annual SaaS pricing model slashes costs by 90%. This setup allows businesses to conduct unlimited research without worrying about per-project fees, making it an ideal solution for companies that regularly rely on market insights.

Beyond cost and speed, Syntellia offers unmatched flexibility. Researchers can access unlimited audiences and make real-time adjustments to their questions without the delays of recruiting participants. This adaptability enables quick decision-making and keeps workflows moving smoothly.

The platform also provides a comprehensive toolkit that includes surveys, focus groups, conjoint analysis, and A/B testing. This all-in-one approach eliminates the need to juggle multiple tools or vendors, streamlining the research process for organizations.

Syntellia’s versatility extends across various research needs, whether for consumers, employees, or policy-related insights. Its ability to serve multiple departments and use cases makes it a go-to solution for diverse organizational challenges.

Finally, Syntellia operates with a zero privacy risk model, meaning it doesn’t rely on real respondents. This eliminates regulatory concerns, such as those tied to CCPA, ensuring compliance without compromising on data quality.

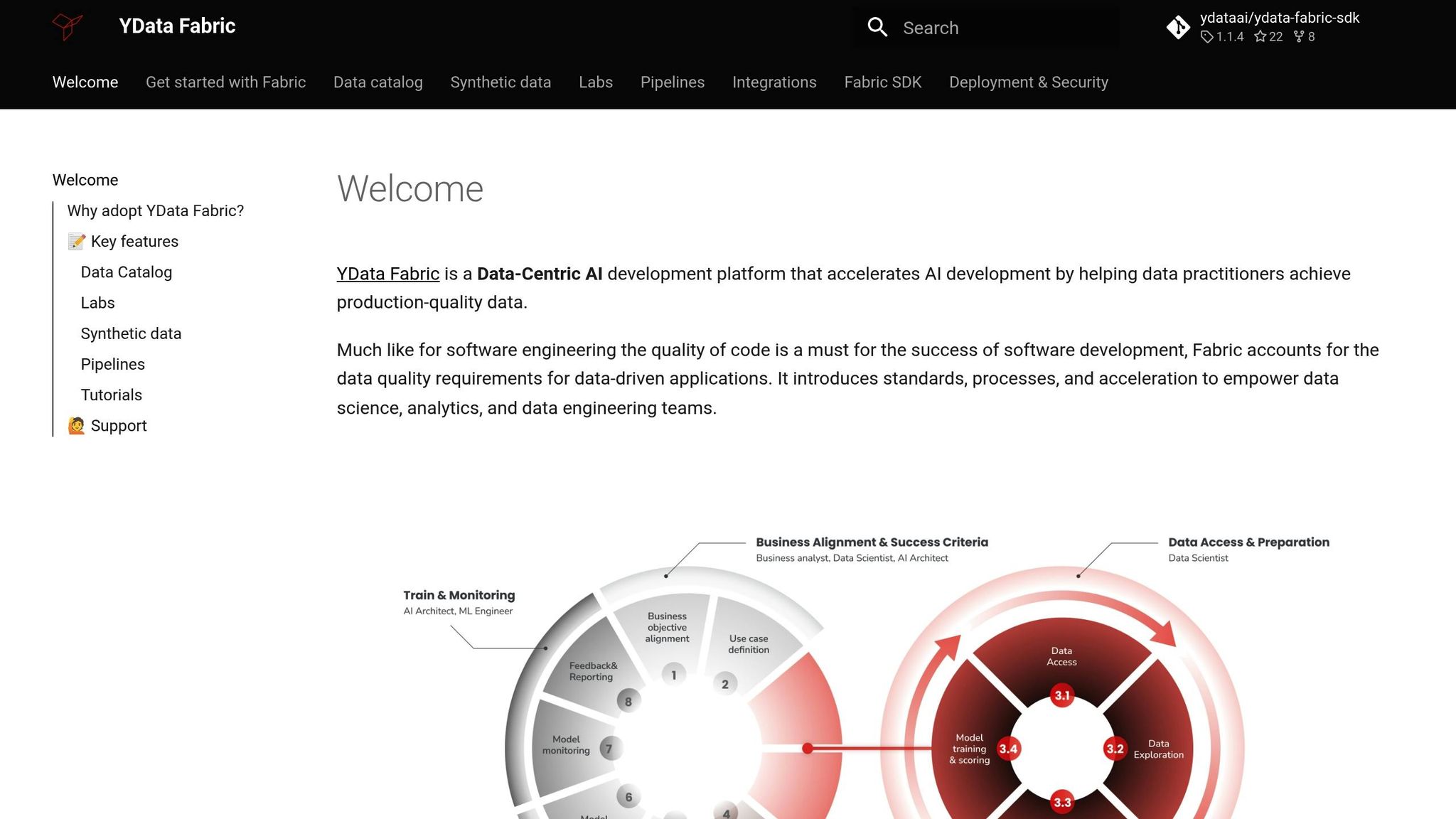

2. YData Fabric

YData Fabric combines data profiling with synthesis to create high-quality training datasets that retain essential statistical properties while adhering to strict privacy regulations. By automatically analyzing the structure of existing datasets, the platform generates synthetic alternatives that mirror the original data without compromising privacy.

This approach not only safeguards data integrity but also addresses privacy concerns early in the data lifecycle. It identifies and removes personally identifiable information (PII) from staging datasets, aligning seamlessly with modern lakehouse governance practices.

For research teams handling sensitive, regulated data, YData Fabric offers features compliant with GDPR and HIPAA. These synthetic datasets maintain the analytical value of the original data while significantly reducing privacy risks. The platform also simplifies pipeline orchestration, speeding up AI development while ensuring compliance with regulatory requirements.

3. Tonic.ai

Tonic.ai stands out as a powerful tool for working with synthetic data, offering a dual-engine system that focuses on both generating new data and masking sensitive production data. Its approach ensures secure environments for research while maintaining the integrity of the data for accurate analysis.

The platform includes three key engines: Fabricate, which generates relational synthetic databases and mock APIs for quick prototyping; Structural, which masks sensitive production data while preserving critical relationships; and Textual, which redacts and synthesizes unstructured text from sources like surveys and interviews. This setup allows teams to work with realistic datasets, whether they lack production data or need to safeguard sensitive information.

Tonic.ai uses AI-powered synthesis to automatically identify sensitive data types and apply suitable protection measures. For more tailored needs, users can configure custom sensitivity rules. The platform also supports de-identification methods like the Safe Harbor approach, making it particularly useful for medical research that involves protected health information (PHI). Throughout, Tonic.ai ensures that the data remains useful for analysis without compromising privacy.

With SOC2 Certification under its belt, Tonic.ai provides flexible deployment options. Teams can opt for self-hosted instances to maintain full control or choose a cloud-based setup for easier management. This adaptability makes it an excellent choice for database testing, as it allows research teams to validate their data pipelines without risking exposure of sensitive production data.

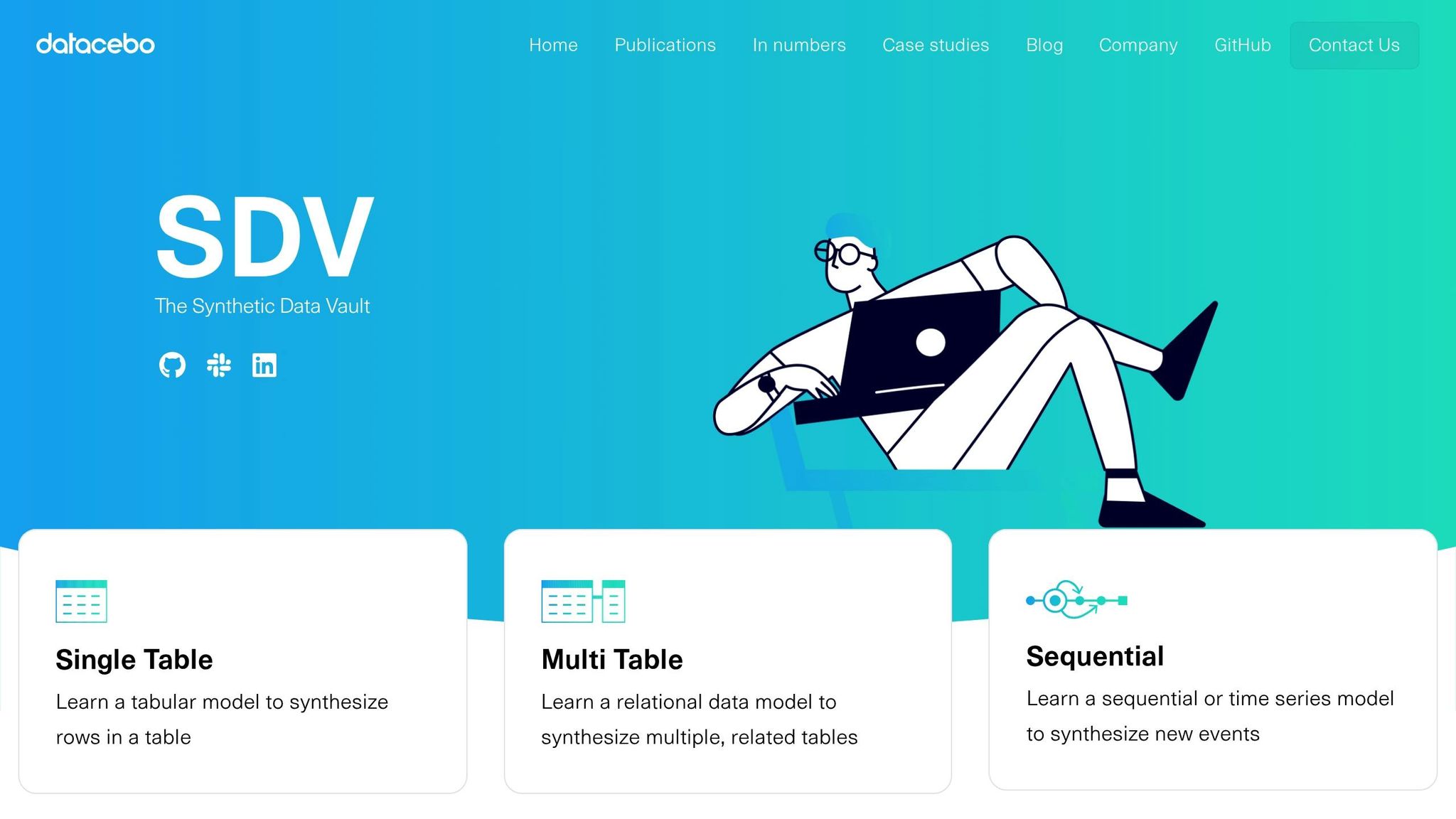

4. Synthetic Data Vault (SDV)

The Synthetic Data Vault (SDV) is an open-source Python framework designed to generate synthetic data for a wide range of research applications. Unlike proprietary tools, SDV gives researchers direct control over the data generation process and can operate in secure, air-gapped environments, making it a flexible choice for diverse needs.

SDV supports various synthesis methods, including single-table, sequential (time-series), and relational synthesis for complex databases. This versatility allows it to handle a broad spectrum of data types effectively.

Its toolkit includes powerful synthesizers like copula models, CTGAN, and TVAE, enabling users to adapt their approach based on the complexity of their datasets.

One of SDV's standout features is its ability to run entirely on standard computing infrastructure. By focusing on local, CPU-friendly training, it eliminates the need for costly cloud services or specialized hardware, making it accessible for research teams working with tight budgets. This makes SDV particularly appealing in settings where cost and infrastructure are significant considerations.

To ensure the quality and privacy of synthetic data, SDV provides companion libraries for evaluation and benchmarking. These tools allow researchers to perform thorough, reproducible assessments of data quality and privacy.

With a streamlined API and comprehensive documentation, SDV integrates smoothly into existing workflows. Research teams can quickly incorporate its data generation capabilities into their scripts or automated processes without extensive setup.

That said, SDV does come with some challenges. It requires technical expertise to operate effectively, and working with large, complex models involving multiple table constraints can lead to longer generation times. Additional privacy measures may also be necessary in such cases.

Despite these challenges, SDV shines in creating complex, referentially consistent datasets, making it an excellent tool for building sandbox environments and supporting machine learning workflows. Research teams can use SDV to test algorithms, train models, and conduct studies - all while reducing privacy risks.

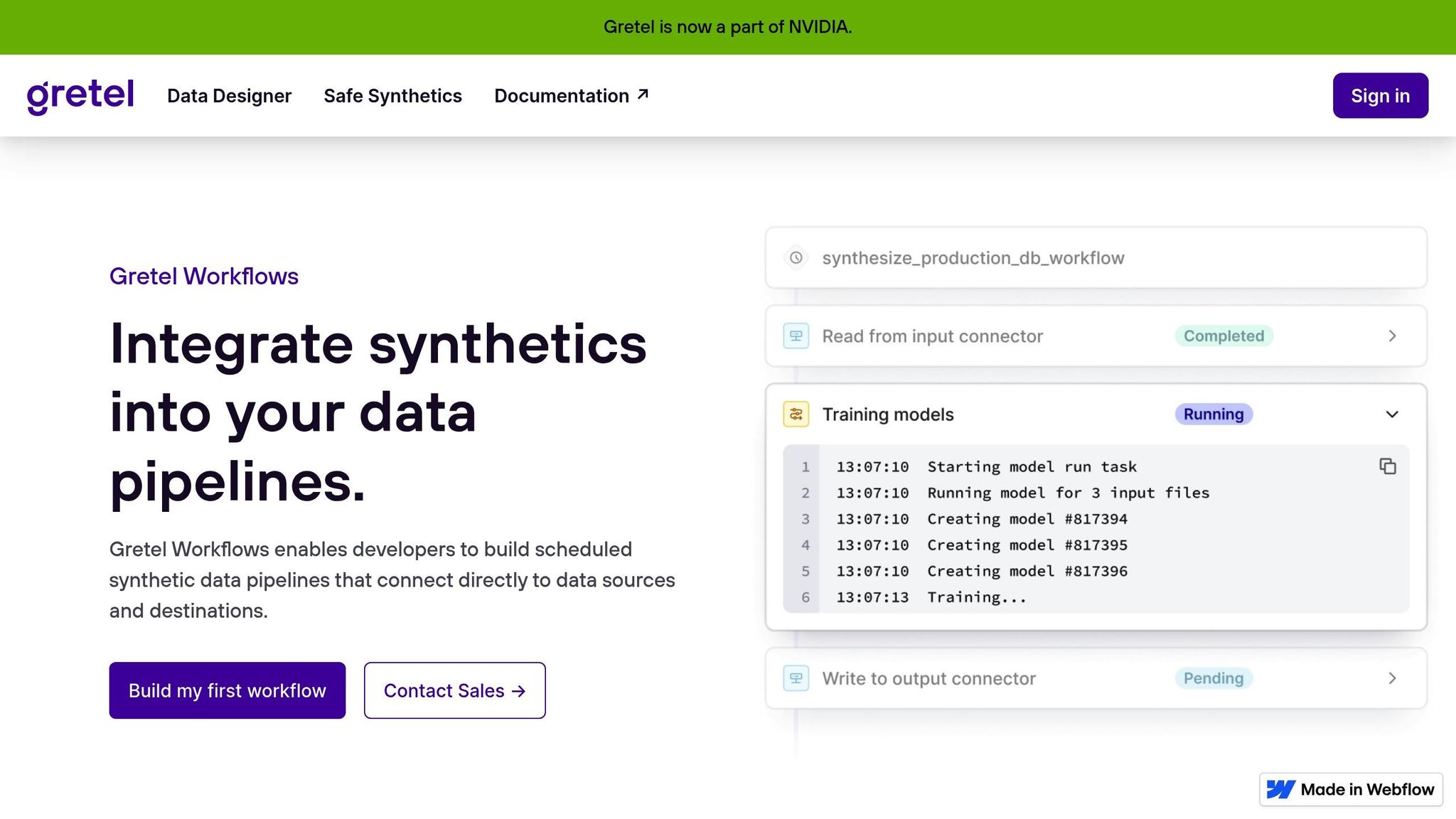

5. Gretel

Gretel is a cloud-based platform designed for developers and AI engineers to create synthetic, anonymized datasets while preserving key data relationships.

What sets Gretel apart is its ability to generate various types of data, including text, tabular, and time-series formats. Its realistic synthetic text generation has made it a valuable tool for advancing NLP research, gaining significant traction in 2025.

The platform offers fine-tuning options, enabling researchers to customize synthetic data to match the specific needs of their domain. This ensures the generated data reflects the unique characteristics of their field.

Gretel’s API-driven design makes it easy to integrate into existing machine learning workflows. This feature is particularly appealing to startups and agile teams looking to experiment and iterate quickly. Additionally, its integration capabilities support reliable assessments of data quality.

The platform also includes built-in metrics to evaluate both privacy protection and the accuracy of the datasets it generates. By producing diverse datasets quickly, Gretel helps reduce the time spent on data preparation, a common bottleneck in machine learning projects.

That said, Gretel does face some challenges. It may not handle very large datasets effectively, which can be a limitation for extensive longitudinal studies. Additionally, its algorithms might pose difficulties for researchers without a technical background.

Even with these limitations, Gretel's focus on privacy, ease of use, and detailed documentation makes it a go-to option for research teams aiming for rapid deployment and developer-friendly tools.

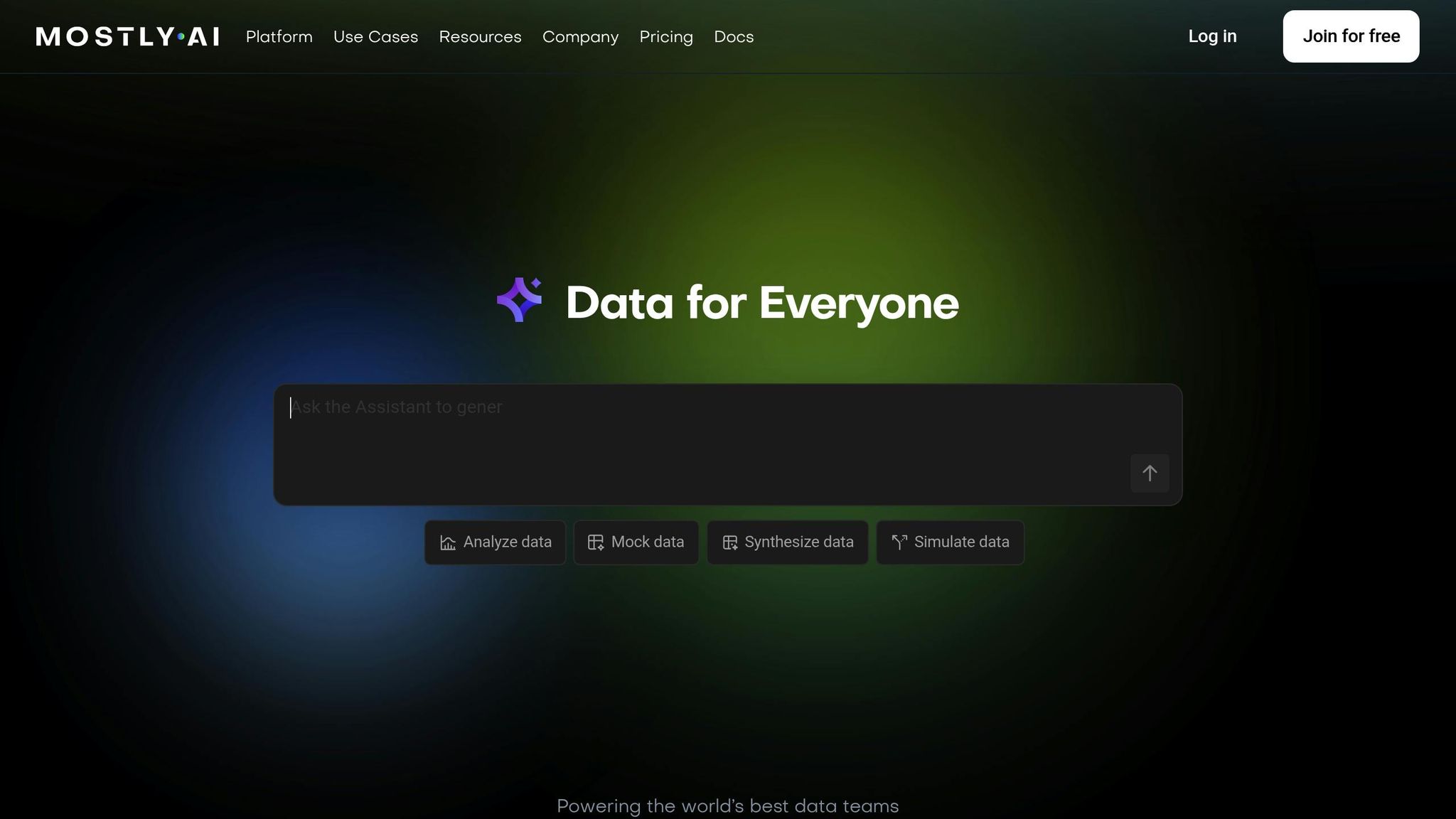

6. Mostly AI

Mostly AI takes a privacy-first approach to synthetic data, offering a platform that transforms real-world production data into synthetic datasets that are privacy-safe yet statistically identical to the original. Using its Generative AI, the platform quickly trains models to replicate the statistical properties of the original data. This ensures that any analysis or insights derived from the synthetic version mirror those of the real data, making it a reliable tool for data exploration while prioritizing privacy.

The platform also simplifies data interaction with its built-in AI Assistant, which allows researchers to explore synthetic datasets using natural language commands. For added flexibility, Mostly AI supports multiple deployment options, including Kubernetes Helm deployment on EKS or EKS Anywhere, ensuring compatibility with private network setups. Collaboration is further enhanced through model sharing, enabling teams to create tailored synthetic datasets from a shared base model.

In real-world scenarios, Mostly AI is particularly effective for research and development, especially in creating mock datasets for AI training and testing. Its precise tabular synthesis ensures that machine learning models trained on synthetic data perform just as well as those trained on the original datasets, making it a powerful tool for AI-driven projects.

sbb-itb-2b2bc16

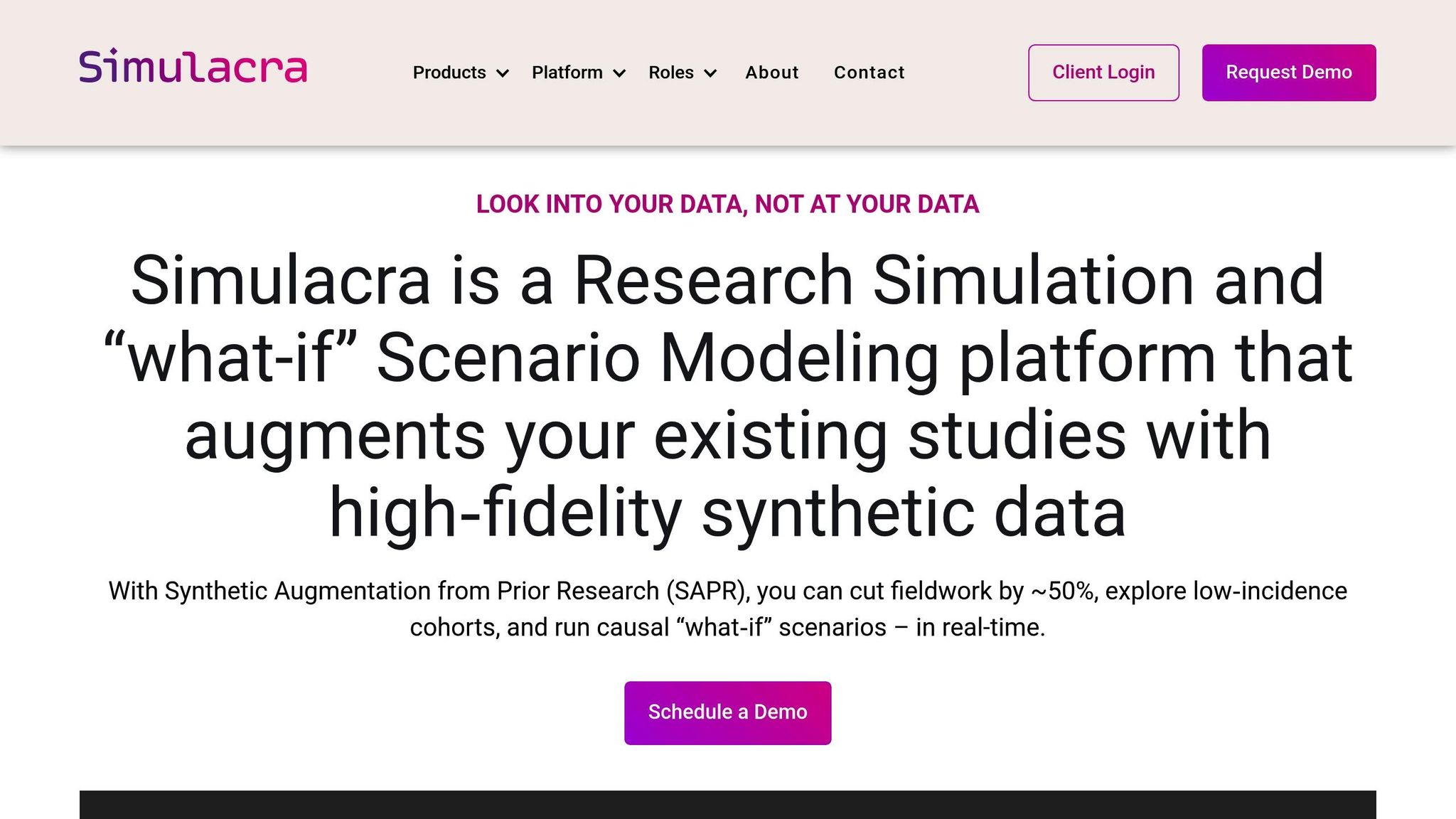

7. Simulacra Synthetic Data Studio

Simulacra Synthetic Data Studio merges Causal AI with high-quality synthetic data to enable real-time scenario modeling. By transforming existing research data into actionable insights, the platform eliminates the need for additional fieldwork, making it a valuable tool for organizations aiming to get the most out of their research investments. This approach reflects the growing shift toward quicker, AI-driven research strategies.

One of its standout features, Synthetic Augmentation from Prior Research (SAPR), allows researchers to cut fieldwork by up to 50%. It’s particularly useful for studying low-incidence consumer groups that are typically expensive or challenging to analyze. With SAPR, teams can run causal “what-if” scenarios in real time, turning static research data into dynamic tools for modeling. This efficiency paves the way for even more advanced data modeling capabilities.

Another key feature is Conditional Generation, which lets users refine synthetic datasets by adding new insights, adjusting demographic details, and setting behavioral parameters. This enables researchers to uncover causal relationships and make accurate predictions based on prior studies - all without conducting additional surveys.

Simulacra has a broad range of applications. Product developers can use it to fine-tune products still in development. Market researchers can quickly extract insights from existing data. Brand managers can identify winning strategies for new product launches, and consumer researchers can breathe new life into old data without the need for fresh fieldwork. Its practical uses extend across industries, including:

- Health and body care: Scenario modeling for optimizing sensory and packaging designs.

- Consumer packaged goods: Pricing and promotional effectiveness analysis.

- Advertising and marketing: Concept and campaign development.

- Political polling: Modeling voter behavior and policy responses.

The platform transforms research workflows, moving away from rigid, traditional methods to more flexible, data-driven exploration. Instead of waiting months for survey results, teams can respond to emerging market trends, dig deeper into patterns, and track shifts in consumer behavior in real time. This agility enables researchers to make quicker, well-informed decisions that align with the pace of today’s markets.

8. Lemon AI

Lemon AI is a synthetic data platform built for research teams that require high-quality, privacy-compliant datasets. Using advanced AI models, it generates synthetic data that mirrors real-world distributions while staying aligned with regulatory standards like HIPAA and GDPR.

The platform supports a variety of data types, including tabular, time-series, and unstructured data, making it versatile for different research needs. With a fidelity rate of up to 90% compared to original datasets, Lemon AI ensures reliable model performance. This makes it a go-to option for research teams working in highly regulated industries.

Privacy is a cornerstone of Lemon AI's design. It uses privacy-preserving algorithms to strip away direct identifiers, ensuring compliance with strict data protection regulations. For instance, a U.S. hospital utilized Lemon AI to create synthetic patient records. These records helped the hospital develop and validate predictive models for patient readmission, improving accuracy while safeguarding patient privacy.

Lemon AI also excels in integration. It offers APIs and connectors for popular tools like Python, R, and Jupyter Notebooks. Users can export data in common formats such as CSV and Parquet, and the platform seamlessly integrates into automated data pipelines for continuous synthetic data generation.

Pricing starts at about $2,500 per month for small research teams as of Q4 2025, with tailored enterprise solutions available for larger organizations. These enterprise packages include dedicated support for more complex needs.

User feedback is overwhelmingly positive, with an average rating of 4.7 out of 5 as of November 2025. However, some users have mentioned that creating highly specialized datasets might require additional assistance from the vendor.

For research teams navigating regulated environments, Lemon AI offers an effective mix of data fidelity, easy integration, and strong compliance, making it a valuable tool in the synthetic data space.

9. brewdata

brewdata is a synthetic data platform designed with privacy at its core. It creates artificial datasets that mimic the statistical properties of real data while stripping away personal identifiers. This makes it a great fit for U.S.-based organizations that need to comply with privacy regulations like HIPAA, GDPR, and CCPA.

What sets brewdata apart is its flexibility. It supports a wide range of data types, including tabular, time-series, and unstructured data, giving research teams the tools they need to tackle various projects. With automated workflows, the platform streamlines dataset creation, cutting down the time spent on tedious data preparation tasks.

The results speak for themselves. Users have reported cutting data preparation time by up to 70% compared to traditional anonymization methods. Plus, when training models with brewdata's synthetic datasets, they’ve seen a 40% boost in accuracy compared to using heavily redacted real data. This is thanks to brewdata's ability to maintain the statistical relationships within the data.

Integration is another strong point. brewdata works seamlessly with popular data science tools, enabling teams to incorporate synthetic data generation directly into their existing workflows. The process - from data creation to validation - can be fully automated without disrupting current operations.

Pricing starts at $499 per month for small teams, with custom options available for larger organizations. The platform has an average user rating of 4.5/5, though some users note that advanced customization may require additional onboarding.

For research teams focused on compliance, brewdata includes detailed audit logs and data lineage tracking, making it easier to meet regulatory reporting and governance requirements. It adheres to major U.S. privacy standards, such as HIPAA for healthcare data and PCI DSS for financial information.

10. Synthera AI

Synthera AI is a newer player in the synthetic data generation space, with limited public information currently available. This lack of detailed documentation means researchers need to carefully evaluate its capabilities based on their specific project requirements.

When considering tools like Synthera AI, it's important to focus on a few critical factors: the ability to produce high-quality, statistically accurate data; how well it integrates with existing research workflows; its compliance with security standards; and whether it can scale effectively for enterprise-level demands.

Because there's not much documentation on Synthera AI yet, research teams should take a cautious approach. This includes requesting product demos, reviewing any available case studies, and running small-scale pilots to test the tool's reliability and performance before committing to larger projects. Taking these steps can help ensure the tool meets the necessary standards for your research needs.

Tool Comparison Table

Below is a detailed comparison showcasing Syntellia's standout features in the synthetic data space. When selecting a synthetic data tool, it's essential to weigh factors like functionality, cost, and privacy measures. Here's how Syntellia performs across key areas:

| Tool | Primary Use Cases | Key Strengths | Privacy Protection | Pricing Model | Research Turnaround |

|---|---|---|---|---|---|

| Syntellia | Consumer insights, employee research, policy analysis | 90% accuracy, real-time iteration, unlimited audience access | Full privacy protection - no real respondents involved | Annual SaaS subscriptions offering 90% cost savings compared to traditional research | 30–60 minutes |

This table highlights how Syntellia's AI-driven platform reshapes research by combining speed, accuracy, and cost efficiency. Its ability to deliver actionable insights without compromising privacy marks a significant step forward in modern research methodologies.

Conclusion

The tools highlighted earlier showcase how synthetic data is reshaping the landscape of modern research. By integrating synthetic data into workflows, researchers can streamline processes, enhance privacy safeguards, and reduce expenses - all while adhering to strict data protection laws.

Platforms like Syntellia, along with others, are setting new benchmarks for research standards. They allow organizations to conduct studies more efficiently by utilizing artificial datasets that preserve statistical accuracy without exposing sensitive information.

As shown in the tool comparison table, selecting the right synthetic data tool requires careful consideration of factors like accuracy and robust privacy protections. Whether your research focuses on consumer behavior, workforce analysis, or policy development, aligning the tool's capabilities with your specific needs is essential for achieving meaningful results.

With synthetic data technology advancing rapidly, adopting these solutions can lead to quicker insights, cost savings, and confidence in regulatory compliance.

FAQs

How do synthetic data tools comply with privacy laws like CCPA, GDPR, and HIPAA?

Synthetic data tools play a key role in meeting privacy laws like CCPA, GDPR, and HIPAA by generating datasets that look and behave like real ones - without relying on actual personal or sensitive information. This method ensures that private data remains protected while still allowing datasets to be used for analysis and decision-making.

The beauty of synthetic data lies in its design. It mirrors the statistical patterns of real data without directly copying it, making it a practical solution for organizations facing strict privacy regulations. These tools are especially important in fields like healthcare, finance, and research, where safeguarding sensitive information is a top priority.

What are the main advantages of using synthetic data tools over traditional research methods?

Synthetic data tools offer a range of advantages compared to traditional research methods. One major benefit is their ability to protect sensitive information. These tools create data that reflects real-world patterns and scenarios without revealing actual personal or confidential details, ensuring privacy remains intact.

Another standout advantage is the time and cost savings they provide. Researchers can generate synthetic data quickly, cutting down on lengthy research timelines and reducing expenses. This allows for faster analysis and decision-making.

With synthetic data, researchers can efficiently test strategies, validate ideas, and refine processes with precision - all without the logistical hurdles and high costs tied to traditional data collection methods.

What factors should researchers consider when choosing a synthetic data tool for their projects?

Choosing the right synthetic data tool starts with understanding your project’s unique needs. Think about the specific type of data you need to generate - whether it’s consumer insights, employee feedback, or policy analysis. Also, consider how precise the data must be to meet your objectives. Compliance with data privacy laws and seamless integration with your current systems should also be top priorities.

When comparing tools, take a close look at their features. Is the platform user-friendly? Can it handle large-scale tasks efficiently? How quickly does it deliver results? For instance, tools like Syntellia stand out for their AI-driven insights, offering both high accuracy and fast turnaround times - perfect for projects with tight deadlines. By matching the tool’s strengths with your goals, you’ll be well-positioned to make the right choice.